COVID: Using standardised tests to baseline pupils this term

With pupils returning to school after what for many has been several months away from the classroom, teachers are understandably wondering how best to assess them on return, to measure their attainment and gauge how much they have been affected by the break in learning. It is therefore no surprise that many schools are considering using standardised tests to baseline pupils early this term.

Standardised tests are a useful and effective assessment tool: they are objective, relatively quick to administer and mark, and provide an external, national reference point. If teachers want to assess pupils' attainment and any drop in learning, then using standardised tests in reading and maths from providers such as NFER, Hodder (PUMA/PIRA, NTS), GL or Renaissance (STAR) makes sense.

As with any test, there are of course limitations. Test scores are noisy and can fluctuate depending on when the test is taken and the pupil's state of mind; pupils have good days and bad days. We must therefore be cautious about reading too much into changes in score over time, and should never subtract one score from another and treat the result as an accurate measure of progress. We must also be aware of the focus and content of the test: a child may have fared better or worse because the test had more questions of a certain type or on a certain topic (which makes comparing scores from different test providers particularly problematic). Finally, the conditions under which the test was taken matter: we cannot compare classes across a school, or schools across a multi-academy trust (MAT), unless the test conditions themselves are standardised. It is worth remembering that the sample population against which a school's cohort is compared will have taken the test under strict conditions.
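To illustrate why subtracting one score from another is risky, here is a minimal sketch. Every score carries a standard error of measurement (SEM), and when two scores are subtracted their errors combine, so the uncertainty in the difference is larger than in either score alone. The SEM of 4 points used below is purely an illustrative assumption, not a figure from any provider; check the test manual for a real value where one is published. The function names are hypothetical.

```python
import math
from typing import Tuple


def difference_standard_error(sem_first: float, sem_second: float) -> float:
    """Standard error of (second score - first score).

    Assumes the two measurement errors are independent, so their
    variances add.
    """
    return math.sqrt(sem_first ** 2 + sem_second ** 2)


def difference_interval(score_first: float, score_second: float,
                        sem_first: float, sem_second: float,
                        z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% range for the true change between two scores."""
    change = score_second - score_first
    half_width = z * difference_standard_error(sem_first, sem_second)
    return change - half_width, change + half_width


# Hypothetical pupil: 96 in the summer, 101 in the autumn.
# ASSUMPTION: an SEM of 4 standardised-score points for both tests.
low, high = difference_interval(96, 101, 4.0, 4.0)
print(f"Observed change: +5; plausible true change: {low:.1f} to {high:.1f}")
```

Under these assumptions the apparent five-point gain comes with a plausible range of roughly -6 to +16 points, which spans zero: the data cannot tell us whether this pupil actually improved.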

But this year we have additional issues to consider. Most schools will probably have chosen to use tests intended for the end of the previous school year, that is, those for the summer term of 2019/20. For example, a school might use the year 3 summer PUMA test for its new year 4 pupils. This makes sense, and is probably a school's best option, but there are two key factors to consider when interpreting the results:

1) The test scores will have been standardised on a cohort of year 3 pupils in the summer term, not on a cohort of year 4 pupils in the autumn term. By using last year's summer tests, the school is not strictly comparing like with like, because the tests are not being used at the intended point in time. As there is often a drop in learning over the summer, we might expect new year 4 pupils to gain lower scores than they would have done at the end of the previous year. Ideally, for the scores to be truly reliable, test providers would re-standardise their end-of-year tests against a sample of pupils tested early in the autumn term. Under the circumstances, this is obviously not possible.

2) The other issue, which is probably less critical than the one above, is that the scores are based on a comparison of each pupil's result against a national sample unaffected by the loss of learning caused by lockdown. The score therefore tells you how a pupil compares to a 'normal' population rather than to the COVID-affected cohort of 2020. Taking the example above, a summer year 3 test taken by new year 4 pupils will tell you how your year 4 pupils compare against end-of-year-3 pupils in normal times; it will not tell you how your year 4 pupils compare to other COVID-affected year 4 pupils nationally. It will give you some idea of how far below the normal population they are (if at all), but it will not tell you whether that gap is unusual under the circumstances.
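As a rough illustration of what the score can and cannot tell you, the sketch below converts a standardised score into the approximate percentage of the standardisation sample scoring lower. It assumes the common scaling of a mean of 100 and a standard deviation of 15 and treats scores as normally distributed; providers publish their own conversion tables, which may differ slightly. The function name is hypothetical.

```python
from statistics import NormalDist

# ASSUMPTION: standardised scores are scaled so that the provider's
# (pre-COVID) standardisation sample has a mean of 100 and an SD of 15,
# and are approximately normally distributed.
NORM_GROUP = NormalDist(mu=100, sigma=15)


def percentile_in_norm_group(standardised_score: float) -> float:
    """Approximate % of the standardisation sample scoring below this score."""
    return 100 * NORM_GROUP.cdf(standardised_score)


# Hypothetical new year 4 pupil scoring 92 on last summer's year 3 test.
print(f"{percentile_in_norm_group(92):.0f}% of the norm group scored lower")
```

A score of 92 sits at roughly the 30th percentile, but only relative to end-of-year-3 pupils tested in normal times. Nothing in the calculation refers to this year's COVID-affected cohort, which is exactly the limitation described above.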

To summarise, the best baseline option is probably to use last summer's standardised tests, but there are caveats. It may be best to focus more on pupils' answers to individual questions and to take the scores with a pinch of salt.

Adding baseline data to Insight

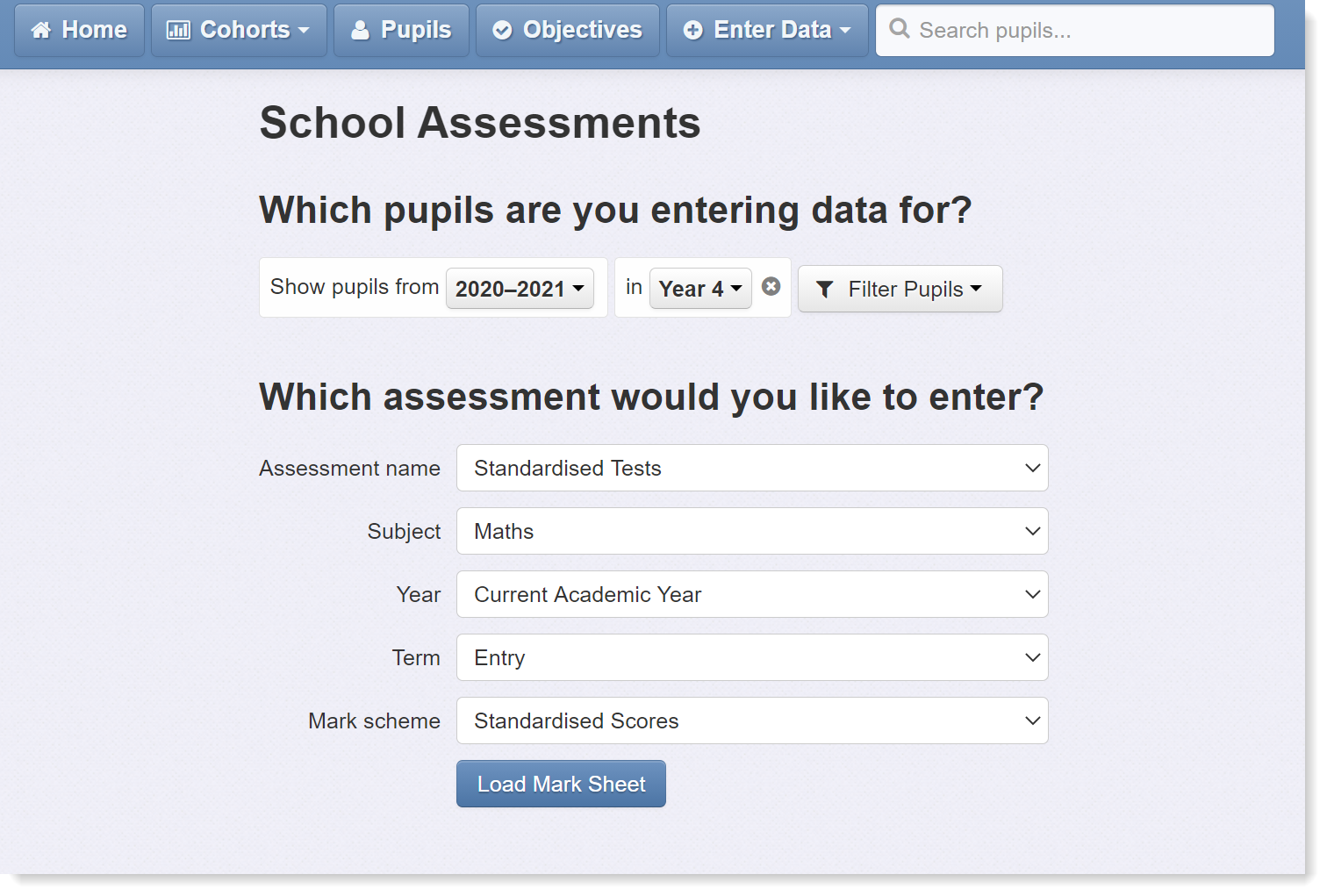

We recommend adding the data for the term in which the assessment was made e.g. 'Entry' or 'Autumn 1', rather than the term for which the test was intended. In the case of new Year 4 pupils taking summer Year 3 maths tests, for example, the data would therefore be entered as Year 4 assessments.

To enter the data, first click on the Enter Data button, and select School Assessments, which is the top option in the list. Then select the following options from the dropdowns provided:

This will bring up the mark sheet where you can enter the pupils' scores.

Using the year prefix when entering standardised scores

In Insight it is possible to add a prefix to show the year group that the test was intended for. This would be done, for example, when a Year 5 pupil takes a Year 3 test. In such a scenario, the school would enter Y3 in front of the score (e.g. Y3 97) to denote that the score relates to a previous year's test.
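The entry format itself is simple: an optional year prefix followed by the score. The sketch below is a hypothetical illustration of how a "Y3 97"-style entry could be split into the test's year group and the score; it is not Insight's own implementation, and the pattern, class and function names are all assumptions.

```python
import re
from typing import NamedTuple, Optional


class StandardisedEntry(NamedTuple):
    test_year: Optional[int]  # year group the test was written for, if prefixed
    score: int


# HYPOTHETICAL pattern: optional "Y<year>" prefix, whitespace, then the score.
ENTRY_PATTERN = re.compile(r"^\s*(?:Y(\d{1,2})\s+)?(\d{2,3})\s*$", re.IGNORECASE)


def parse_entry(text: str) -> StandardisedEntry:
    """Split an entry such as 'Y3 97' or '97' into (test_year, score)."""
    match = ENTRY_PATTERN.match(text)
    if match is None:
        raise ValueError(f"Unrecognised score entry: {text!r}")
    year, score = match.groups()
    return StandardisedEntry(int(year) if year else None, int(score))


def is_previous_year_test(entry: StandardisedEntry, pupil_year: int) -> bool:
    """True if the prefix marks a test written for a lower year group."""
    return entry.test_year is not None and entry.test_year < pupil_year


print(parse_entry("Y3 97"))  # a Year 5 pupil's score on a Year 3 test
print(parse_entry("97"))     # an unprefixed, age-appropriate score
```

An entry without a prefix carries no year group, so it is treated as a score on an age-appropriate test.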

Should schools use the year prefix for baseline test scores this term?

When entering baseline scores this term, for example where pupils are taking a previous year's summer test, we do not recommend using the prefix. As these pupils are close in age to the intended audience of the test (new Year 4 pupils, for instance, are close in age to end-of-Year-3 pupils), it is best to treat the test as age appropriate and to enter the scores without the prefix. Adding the prefix would categorise all pupils as 'below', and the reality is probably more subtle than that.